Table Of Contents

How to recreate MS Access primary keys and indexes in PG Intermediate

How to map MS Access auto number to PostgreSQL serial Intermediate

PostgreSQL 9.3 Lateral Part 1: Use with HStore

PostgreSQL 9.2 windows binaries for file_textarray_fdw

From the Editors

PostGIS in Action 2nd Edition reached MEAP

Just a heads up, the Second Edition of PostGIS In Action has officially reached MEAP stage meaning you can buy now and get draft chapters as we write them and get final copy when released. Have first drafts of 5 chapters so far. And many more coming shortly.

It is currently the Manning Deal of the Day

January 22, 2013

PostGIS in Action

Second Edition

Get half off the MEAP eBook

or MEAP pBook

Enter pgislaunchau in the Promotional Code box when you check out.

http://www.manning.com/obe2

With purchase you also get the E-Book copy of the first edition.

What's new and upcoming in PostgreSQL

PostgreSQL 9.3 Lateral Part2: The Lateral Left Join Intermediate

In the last article we said you can't have a LEFT JOIN with LATERAL. Turns out we were mistaken and YES indeed you can and when you do it is equivalent or more powerful than SQL Server's OUTER APPLY.

Let us say that in our query we wanted all my zips returned but for ones that had keys where the value is in a certain range, we want those keys returned. we'd do this. The fact we need all even if they have no such keys necessitates us putting the condition in the ON rather than the WHERE.

SELECT zip, (h).key, (h).value As val

FROM zcta5 AS foo

LEFT JOIN LATERAL each(hstore(foo) - 'zip'::text) As h

ON ((h).value BETWEEN '12345' and '14567')

ORDER BY zip

limit 5;

Output would be

zip | key | val ------+--------+---------- 00601 | | 00602 | | 00603 | | 00606 | awater | 12487.00 00610 | hu10 | 12618

PostgreSQL Q & A

How to bulk export tables from MS Access Intermediate

Despite all the nasty things people say about MS Access, it does have fairly database agnostic, easy to use import and export tools and a not too shabby query wizard and query tool. But of course, it's not a server side database so at a certain point won't scale for database storage. You can still use it as a front-end to a server-side database such as PostgreSQL or SQL Server. So once you outgrow it for data storage, you'll probably want a quick way to export your data out. Unfortunately, well at least in the 2003 version, while you can bulk link tables from an ODBC connection, you can only export one table at a time using the "select table" right-click export. In this article we'll show a quick and dirty export MS Access Visual basic sub routine we use to export all tables. This we've only tested on MS Access 2003, so if you are using higher, you might need to customize it a bit. This script should work fine for exporting to any database connection with modification of the connection string.

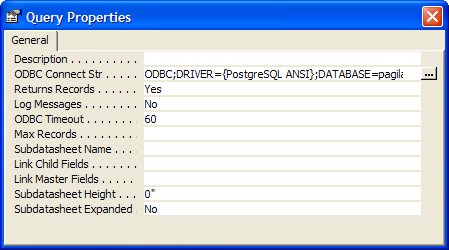

Figuring out the connection string to put in

To figure out what connection string to put in for any kind of database (not just PostgreSQL), follow these steps

- Create a File DSN

- Create a query in design view

- Go to Query->SQL Specific->Pass-through:

- Click properties and the ... and pick the DSN you created in 1.

- Your connection string is what shows in the ODBC field.

VB Subroutine to export all your tables

Here is the script we use. You can replace the whole connection string parameter with what you got from SQL-Pass Thru, but this is what ours looks like using the free ODBC PostgreSQL driver you can download from http://www.postgresql.org/ftp/odbc/versions/msi/.

Sub modExportToPG()

Dim rs As DAO.Recordset

'We only want to export physical user defined visible and hidden tables

Set rs = CurrentDb.OpenRecordset("SELECT Name " & _

" FROM MSysObjects " & _

" WHERE Type=1 AND Flags < 9 ORDER BY Name;")

Do Until rs.EOF

'export the tables but export as lower case table names

DoCmd.TransferDatabase acExport, "ODBC Database" _

, "ODBC;DRIVER={PostgreSQL Unicode};DATABASE=mydb;SERVER=myserver;PORT=5432;UID=myuser;PWD=mypwd" _

, acTable, rs("Name"), LCase(rs("Name"))

rs.MoveNext

Loop

rs.Close

End Sub

Caveats

There are a couple of things not ideal with MS Access export logic:

- the casing of the fields when exported is preserved and this can be incredibly annoying since it requires quoting all the fields to use them in PostgreSQL. To fix this issue, we use the approach we described in Lowercasing table and column names

- For really huge tables in the millions of records, we usually don't use this approach because MS Access tries to register a transaction and the back and forth is really slow, so for huge tables we export to some sort of delimeted format and then import with psql or PostgreSQL SQL COPY or FDW.

- MS Access generally does a good job of mapping field types e.g Yes/No become boolean in PostgreSQL. However autonumber fields do not become serial keys automatically in PostgreSQL. We'll demonstrate how to create sequences and bind them (effectively making them serials) in PostgreSQL.

- MS Access export doesn't recreate the primary keys, indexes, and foreign key constraints. We'll demonstrate how to generate an SQL script the recreation of the primary key and index keys from using a VB subroutine that scans the MS Access tables in the next article.

PostgreSQL Q & A

How to recreate MS Access primary keys and indexes in PG Intermediate

This exercise is a continuation of our How to bulk export tables from MS Access. Now while this approach will work for other databases besides PostgreSQL, you'll probably need to fiddle with the subroutine to make it work for some other databases. PostgreSQL is fairly ANSI-SQL standard so not too much fiddling should be required to port to SQL Server, MySQL, Oracle etc.

Unlike the prior Visual basic subroutine we showed that exports the tables, this just creates an SQL script that you run on the already created PostgreSQL database that contains the exported data. We didn't test the quote option though we coded it in the subroutine, since like we said we hate having to quote fields. If perchance you are one of those folks that likes to put spaces in your field names to make it more englishy, then you'll need to quote or revise the other routine to convert your spaces to _ or some other thing.

Generate SQL script to make the keys

This routine should be saved in a module in the database you exported data from similar to the other routine. It creates a file on the C drive. Fill free to rename or even revise the sub routine to take it in as an argument.

Sub buildkeysInPG(Optional quoteFields As Boolean = False)

Dim db As Database

Dim tdf As TableDef

Dim fld As DAO.Field

Dim ndx As DAO.Index

Dim strSQL As String

Dim strFlds As String

Dim fs, f

Dim delimStart As String

Dim delimEnd As String

If quoteFields Then

delimStart = """"

delimEnd = """"

Else

delimStart = ""

delimEnd = ""

End If

Set db = CurrentDb

Set fs = CreateObject("Scripting.FileSystemObject")

'-- Change this path to where you want script generated

Set f = fs.CreateTextFile("C:\pgindexes.sql")

For Each tdf In db.TableDefs

'-- No system tables or temp tables

If Left(tdf.Name, 4) <> "Msys" And Left(tdf.Name, 1) <> "~" Then

'Indexes and Primary Keys

strSQL = ""

For Each ndx In tdf.Indexes

If ndx.Primary Then

'-- MS Access has a habit of calling all primary keys PrimaryKey

'-- if you do not explicitly name them

'-- and key and index names have to be

'-- unique in PostgreSQL within each schema

'-- so lets just name it after the table: pk_tablename

strSQL = "ALTER TABLE " & delimStart & tdf.Name & delimEnd & " ADD CONSTRAINT " & _

delimStart & "pk_" & tdf.Name & delimEnd & " PRIMARY KEY"

Else

If ndx.Unique Then

strSQL = "CREATE UNIQUE INDEX "

Else

strSQL = "CREATE INDEX "

End If

'-- Get rid of spaces in index names replace with underscore

'-- , prefix table name in index name

'-- because names need to be unique in postgresql

strSQL = strSQL & delimStart & _

"idx_" & tdf.Name & "_" & Replace(ndx.Name, " ", "_") & delimEnd & " ON " & _

delimStart & tdf.Name & delimEnd

End If

strSQL = strSQL & " ("

strFlds = ""

For Each fld In ndx.Fields

'-- Loop thru the fields of index so this handles compound keys

strFlds = strFlds & "," & delimStart & fld.Name & delimEnd

Next

'-- Get rid of dangling , we introduced

strSQL = strSQL & Mid(strFlds, 2) & ") "

f.WriteLine strSQL & ";" & vbCrLf

Next

End If

Next

f.Close

End Sub

PostgreSQL Q & A

How to map MS Access auto number to PostgreSQL serial Intermediate

This exercise is a continuation of our How to bulk export tables from MS Access and How to recreate MS Access primary keys and indexes in PostgreSQL. As mentioned in the first article, when you use the built-in export feature of MS Access, it exports autonumber fields as integers instead of the more appropriate PostgreSQL pseudo serial type.

The serial type in PostgreSQL is not really a type though, so this behavior is expected. The serial type is really short-hand for defining an integer column and a corresponding sequence object

with a specific naming convention and setting the default value to the next value of the sequence. So this is what we will do in this exercise after we have already exported our data to PostgreSQL. The script we are about to demonstrate will generate an sQL script containing all the CREATE SEQUENCE, and ALTER TABLE ALTER COLUMN SET DEFAULT .. needed to convert our integer column to a serial column.

As a side note, Mark mentioned a similar approach to what we've been describing here, but builds all the table , key structures utilizing python. You can check out the python script at http://code.activestate.com/recipes/52267-reverse-engineer-ms-accessjet-databases

Generate CREATE SEQUENCE ALTER TABLE from MS Access table definition

Sub buildsequencesInPG(Optional quoteFields As Boolean = False)

Dim db As Database

Dim tdf As TableDef

Dim fld As DAO.Field

Dim strSQL As String

Dim fs, f

Dim delimStart As String

Dim delimEnd As String

If quoteFields Then

delimStart = """"

delimEnd = """"

Else

delimStart = ""

delimEnd = ""

End If

Set db = CurrentDb

Set fs = CreateObject("Scripting.FileSystemObject")

'-- change this to where you want the file created

Set f = fs.CreateTextFile("C:\pgsequences.sql")

For Each tdf In db.TableDefs

'-- No system tables or temp tables

If Left(tdf.Name, 4) <> "Msys" And Left(tdf.Name, 1) <> "~" Then

'-- loop thru field set of table looking for autonumber fields

For Each fld In tdf.Fields

strFlds = strFlds & ",[" & fld.Name & "] "

strSQL = ""

If fld.Type = dbLong Then

If (fld.Attributes And dbAutoIncrField) = 0& Then

'it is not an autonumber field

Else

'-- Create a postgresql sequence object

'-- with start being one after the max value of the current column value

'-- we name the sequence in convention tablename_field_seq

'-- so that PostgreSQL will display as a serial

strSQL = "CREATE SEQUENCE " & delimStart & tdf.Name & "_" & fld.Name & "_seq" & delimEnd & _

" START " & (Nz(DMax(fld.Name, tdf.Name), 0) + 1) & "; "

' -- set table column default to next value of sequence

' -- this effectively makes it an autonumber

strSQL = strSQL & "ALTER TABLE " & delimStart & tdf.Name & delimEnd & _

" ALTER COLUMN " & delimStart & fld.Name & delimEnd & _

" SET DEFAULT nextval('" & delimStart & tdf.Name & "_" & fld.Name & "_seq" & delimEnd & "'::regclass);"

f.WriteLine strSQL & vbCrLf

End If

End If

Next

End If

Next

f.Close

End Sub

Using PostgreSQL Extensions

Unpivoting data in PostgreSQL

A while ago we demonstrated how to create cross tabulation tables using tablefunc extension aka (Pivot Tables) (basically collapsing rows into columns). Recently someone asked me how to do the reverse (convert columns to rows). He found a solution to the problem here: http://stackoverflow.com/questions/1128737/unpivot-and-postgresql using a combination of array and unnest. That approach is very similar to SQL Server's built-in Pivot SQL predicate. The solution seemed nice enough except similar to the SQL Server Unpivot, it required knowing the column names beforehand so very hard to genericize. So would it be possible to accomplish this feat without knowing the columns names (except for the key) and be able to do it with one SQL statement. I realized that the PostgreSQL hstore extension fit the bill nicely. In this article I'll demonstrate both approaches by creating a view using both.

Installing Hstore

If you don't have hstore installed and are running PostgreSQL 9.1+, you can use the handy

CREATE EXTENSION hstore;For lower versions, look for the hstore.sql file in share/contrib and run in your database.

Load Our data

For this exercise, I'm going to grab data the Gazetteer zipcode tabulation areas http://www.census.gov/geo/maps-data/data/gazetteer2010.html

First create table to hold the data:

CREATE TABLE zcta5 (zip char(5) primary key, pop10 integer, hu10 integer

, aland numeric(16,2), awater numeric(16,2)

, aland_sqmi numeric(16,2), awater_sqmi numeric(16,2)

, intptlong numeric(8,5), intptlat numeric(8,5));

Load the data with psql:

--in psql

\cd /Gaz_zcta_national

\copy zcta5 FROM Gaz_zcta_national.txt DELIMITER E'\t' CSV HEADER

The Hstore unpivot view

We create a view that renders our table unpivoted:

CREATE VIEW vw_zcta_unpivot_hs

AS

SELECT zip, (h).key, (h).value As val

FROM (SELECT zip, each(hstore(foo) - 'zip'::text) As h

FROM zcta5 as foo ) As unpiv ;

Take it for a test drive by outputting just one zip

--10 ms uses index too

-- returns 8 rows

SELECT * FROM vw_zcta_unpivot_hs WHERE zip = '02109';

zip | key | val

-------+-------------+-----------

02109 | hu10 | 2462

02109 | aland | 449654.00

02109 | pop10 | 3771

02109 | awater | 292691.00

02109 | intptlat | -71.05063

02109 | intptlong | 42.36722

02109 | aland_sqmi | 0.17

02109 | awater_sqmi | 0.11--output the whole thing 3,360ms (264960 records) --SELECT * from vw_zcta_unpivot_hs;-- no network effects-- -- 290msSELECT count(*) from vw_zcta_unpivot_hs;

The thing that is kind of cool about this approach is that if I add another column to my table, I don't need to redo the view for it to output that new column as a row. To demonstrate

ALTER TABLE zcta5 ADD COLUMN hu_income10 numeric(12,2);

SELECT * FROM vw_zcta_unpivot_hs WHERE zip = '02109';

zip | key | val

-------+-------------+-----------

02109 | hu10 | 2462

02109 | aland | 449654.00

02109 | pop10 | 3771

02109 | awater | 292691.00

02109 | intptlat | -71.05063

02109 | intptlong | 42.36722

02109 | aland_sqmi | 0.17

02109 | awater_sqmi | 0.11

02109 | hu_income10 |

The array unpivot view

The second approach is to use array and unnest. As mentioned, the thing I didn't like about this approach is that it's not dynamic - you have to itemize the columns. It also on the downside requires you to cast all the column values before hand if they are not all the same type. It does have a couple of benefits:

- It uses built-in types so no need to install an extension though it requires PostgreSQL 8.4+ because of unnest

- You can grab just a subset very efficiently and if you only care about numeric columns would probably be best.

- It's generally faster.

To test performance I ran this:

Create the view

CREATE OR REPLACE VIEW vw_zcta_unpivot_ary

AS

SELECT zip

, unnest(

array['hu10', 'aland', 'pop10'

, 'awater', 'intptlat', 'intptlong', 'aland_sqmi', 'awater_sqmi']

) AS key

, unnest(

array[hu10::text, aland::text, pop10::text

, awater::text, intptlat::text, intptlong::text

, aland_sqmi::text, awater_sqmi::text]

) AS val

FROM zcta5;

Test some queries

The single record one takes same time and uses index but shorter plan:

--where filter 10 ms --

SELECT *

FROM vw_zcta_unpivot_ary

WHERE zip = '02109';

Output the whole view

-- network effects --

-- 3120 ms (264960 records)

SELECT * from vw_zcta_unpivot_ary; -- no network effects --

-- 200 ms

SELECT count(*) FROM vw_zcta_unpivot_ary;So there you have it, two very different approaches for accomplishing the same task.

Using PostgreSQL Extensions

PostgreSQL 9.3 Lateral Part 1: Use with HStore

One of the improvements coming in PostgreSQL 9.3 is the new LATERAL SQL clause. LATERAL allows you to write more

succinct code than you would be able to otherwise and will be a welcome companion to extensions like hstore and PostGIS which both

have a plethora of set returning functions. In this article, I'll just demonstrate it's use with hstore and subsequent I'll talk

about it's potential use in PostGIS raster,geometry, and topology for exploding subelements.

One thing I was very interested in aside from the succinctness is whether it will be more performant than the older approach. A perfect test case for hstore would be the example we just demonstrated in Unpivoting data in PostgreSQL. For this example, we'll rewrite the hstore view using LATERAL instead of employing a subselect.

Our original hstore view

In Pre-9.3, we'd write the view as we saw in the prior article:

CREATE OR REPLACE VIEW vw_zcta_unpivot_hs

AS

SELECT zip, (h).key, (h).value As val

FROM (SELECT zip, each(hstore(foo) - 'zip'::text) As h

FROM zcta5 as foo ) As unpiv ;

What is all of that cryptic mumbo jumbo?

The reason for the subselect instead of doing (each(..)).* is to prevent the each function from being called multiple times.

For those who were confused by the - 'zip'::text idiom, one of the neat features of PostgreSQL is that it allows types to define their own

implementations of operators. The hstore function defines it's own minus - operator

to mean remove a key or set of keys from the list. If you subtract a string it will remove all key-val where the key is the string you passed in.

If you wanted to remove multiple elements, you'd do something like hstore - array['zip', 'hu10']::text[].

The each function is a function packaged with hstore that explodes an hstore object into a set of key/value pairs.

The hstore function, also packaged with hstore, takes many forms and the one we are using here, takes a row object and converts it to an hstore.

Rewriting the view with LATERAL

In 9.3, we can rewrite our view using LATERAL, but will it perform better? Here is the view rewritten using LATERAL.

CREATE OR REPLACE VIEW vw_zcta_unpivot_hs_lateral

AS

SELECT zip, (h).key, (h).value As val

FROM zcta5 AS foo

CROSS JOIN LATERAL each(hstore(foo) - 'zip'::text) As h;

People may laugh for our explicit use of CROSS JOIN when a , would suffice. Sadly the new LATERAL

clause does not support LEFT JOIN which would be a very welcome addition, and this is just a reminder that it doesn't. For this case there is no

need, but there are many where LATERAL is still not an option if you need the LEFT side returned even if no matching records in the LATERAL.

As we can see, this version of the view is slighly shorter than the original, and would have been shorter if we weren't so anal about writing out CROSS JOIN. But is it faster?

I was hoping the speed would be as good or even better than the old approach, but sadly it was slightly worse. Difference in speed on my test 9.3 postgres instance -- old approach (250 ms), lateral approach (305 ms) -- this is doing a full explain analyze verbose (so without network effects). Index is still used if you do specific zip filter, and is about same speed as old approach (10ms).

There is hope

Well PostgreSQL 9.3 is not out yet so there is still hope that performance will be improved and LEFT JOIN or equivalent

will be supported to rival the SQL Server .OUTER APPLY clause

Using PostgreSQL Extensions

PostgreSQL 9.2 windows binaries for file_textarray_fdw

We discussed a while back the Text array foreign data wrapper that allows you to register and query a delimited file as if it were a regular table with one array column. It's probably the FDW we use most often and reminded of that recently when I had to query a 500,000 record resident list tab delimited file to prep for geocoding.

When we upgraded to 9.2 and we could no longer compile, I wrote to Andrew Dunstan about this and he kindly created a 9.2 version. Unfortunately there are still quite a few FDWs broken as a result of the 9.2 changes and I was hoping to try to apply similar patches to them that I saw Andrew do, but haven't had the patience or time yet. Anyway we've compiled these for 9.2 under our mingw64-w64 and mingw64-w32 chains using Andrew's 9.2 GitHub stable branch. https://github.com/adunstan/file_text_array_fdw/tree/REL9_2_STABLE and we've tested them using the PostgreSQL EDB windows VC++ compiled versions. We hope you find them as useful as we have.

I'm hoping to add more FDWs to these 9.2 bags once we have those working again. If you want to compile yourself or compile others, we have instructions in the packaged README.txt.